Riot will start eavesdropping on toxic Valorant players to issue bans

Riot Games released a report today describing the current state of anti-toxicity measures in Valorant, as well as the future of such efforts, including a beta program to record and analyze the voice comms of players reported for abusive chat.

Riot updated its terms of service last year to accommodate this change, and it will begin a beta rollout of the voice moderation program sometime in 2022. The report was light on details, but voice comms will supposedly only be recorded and analyzed for moderation after a player has already been reported for toxic behavior.

I hope to hear more specifics on the program ahead of its implementation, such as how many times a player will have to be reported before being surveilled, and whether the punishment and appeal process will differ from those of the anti-toxicity programs Riot already has in place.

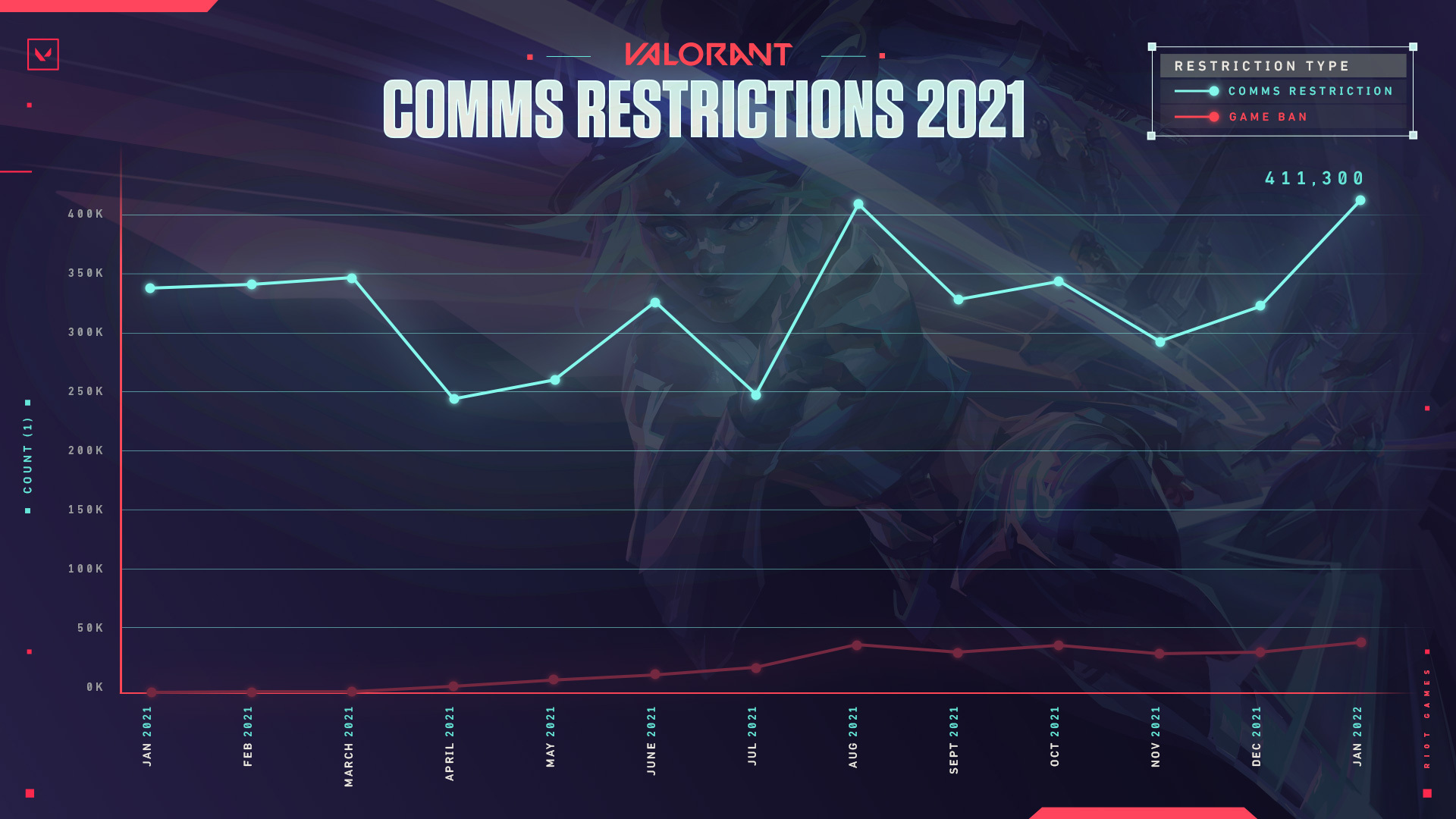

The main body of the report outlined Valorant’s current system for muting offensive words in text chat, the essential role player reporting plays in improving the game, and the results of these systems as reflected in the rate of bans and comms restrictions.

Interestingly, even though punishments are on the rise, Riot’s player surveys show that the perception of harassment in Valorant remains steady. In Riot’s own words: “…we noticed that the frequency with which players encounter harassment in our game hasn’t meaningfully gone down. Long story short, we know that the work we’ve done up to now is, at best, foundational, and there’s a ton more to build on top of it in 2022 and beyond.” I was impressed that Riot would admit to this discrepancy instead of just citing the increased rate of moderation as a win.

(Image credit: Riot Games)

The report went on to describe some plans for the immediate future of Valorant’s anti-toxicity systems:

“Generally harsher punishments for existing systems: For some of the existing systems today to detect and moderate toxicity, we’ve spent some time at a more “conservative” level while we gathered data (to make sure we weren’t detecting incorrectly). We feel a lot more confident in these detections, so we’ll begin to gradually increase the severity and escalation of these penalties. It should result in quicker treatment of bad actors.

More immediate, real-time text moderation: While we currently have automatic detection of “zero tolerance” words when typed in chat, the resulting punishments don’t occur until after a game has finished. We’re looking into ways to administer punishments immediately after they happen.

Improvements to existing voice moderation: Currently, we rely on repeated player reports on an offender to determine whether voice chat abuse has occurred. Voice chat abuse is significantly harder to detect compared to text (and often involves a more manual process), but we’ve been taking incremental steps to make improvements. Instead of keeping everything under wraps until we feel like voice moderation is “perfect” (which it will never be), we’ll post regular updates on the changes and improvements we make to the system. Keep an eye out for the next update on this around the middle of this year.

Regional Test Pilot Program: Our Turkish team recently rolled out a local pilot program to try and better combat toxicity in their region. The long and short of it is to create a reporting line with Player Support agents—who will oversee incoming reports strictly dedicated to player behavior—and take action based on established guidelines. Consider this very beta, but if it shows enough promise, a version of it could potentially spread to other regions.”

All in all, I read this report as a positive thing. I do get uneasy at the thought of voice recording and analysis being used against players, but games like Valorant are inherently social projects that are already subject to data gathering and surveillance. When I think of the truly vile things I’ve heard while playing some competitive games, well, it makes me appreciate any sort of effort at curbing that behavior.

With toxicity and harassment in one of Riot’s games under discussion, I feel I have to point out the elephant in the room: the company’s own checkered history with its internal culture, including allegations of sexual harassment and abuse. Riot settled the 2018 gender discrimination lawsuit against it for $100 million at the end of last year, and the company publicly maintains it has turned a corner. The harassment suit filed against Riot CEO Nicolo Laurent by his former assistant remains open, while an internal Riot investigation into his conduct found no wrongdoing.