What you need to know about HDR for PC gaming

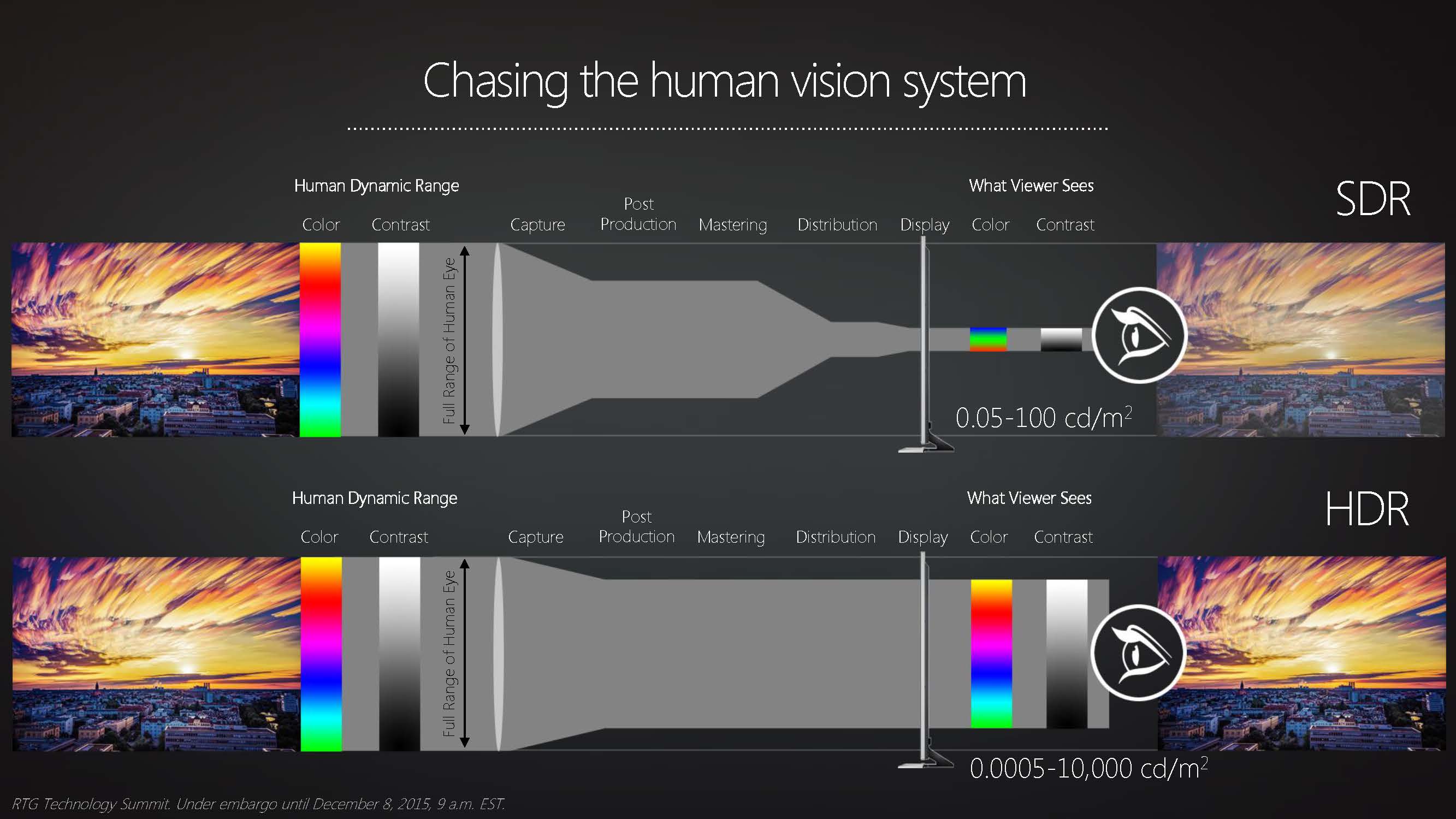

HDR stands for High Dynamic Range. When you see it plastered on a gaming monitor, TV, or game, it means the product supports a wider and deeper range of color and contrast than SDR, or Standard Dynamic Range. After a slow start on PC, HDR has become quite pervasive in PC gaming, and you can now enjoy heaps of games with HDR enabled.

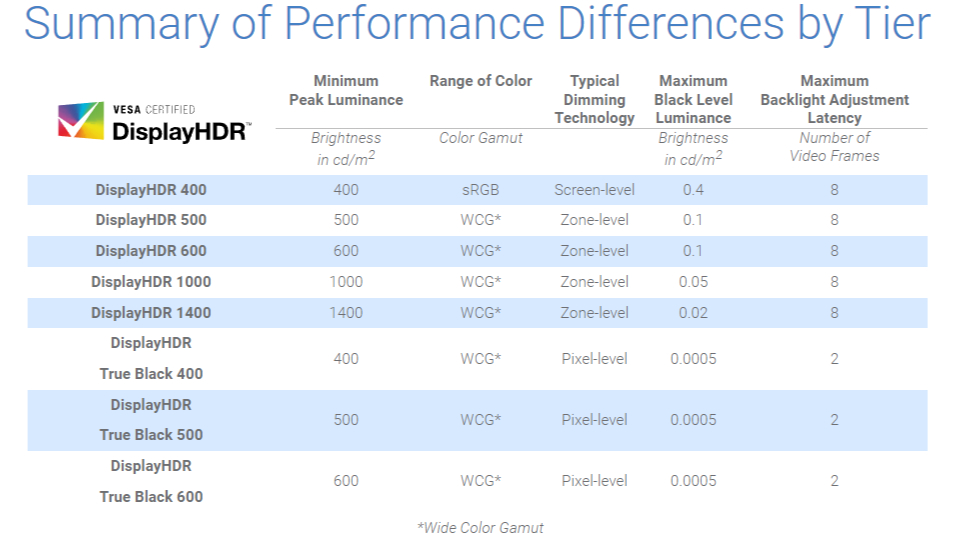

There is some bad news, though. HDR isn’t a one-and-done sort of standard. There are loads of levels to HDR, and how good it looks depends on your monitor or TV’s capabilities. VESA’s DisplayHDR standard made things a little clearer for customers by offering an easily identifiable grading scale, though there’s still a lot more going on behind the scenes than these specifications let on.

Hardware HDR goes far beyond the simulated HDR found in older games like Half-Life 2.

What is HDR?

HDR, or High Dynamic Range, is an umbrella term for a series of standards designed to expand the color and contrast range of video displays far beyond what conventional SDR displays can produce. Despite what you may have heard during the headlong push to 4K, resolution isn’t top dog when it comes to image quality.

Contrast, brightness, and vibrant color all become more important to image quality once resolution needs are met, and improving these is what HDR is all about. It’s not an incremental upgrade either; HDR’s radical requirements will mean new hardware for almost everyone and a difference you don’t need a benchmark or trained eyes to perceive.

Luminance is measured in candelas per square metre (cd/m²), and you’ll tend to see monitors offering peak luminance anywhere from 100 to 1500 cd/m². Don’t worry if you see luminance measured in nits instead: a nit is simply another name for one cd/m², so the two are interchangeable.

Similarly, you might see luminance and brightness used interchangeably, which is roughly fine, though they are technically different. Luminance is the measurable amount of light an object emits, while brightness is our perception of that light.

HDR specs should really require a minimum of 1000 cd/m² for LCD screens, but the DisplayHDR 400 standard only asks for a peak of 400 cd/m². That’s not much brighter than most SDR panels; in fact, some SDR gaming monitors were already hitting 300–400 cd/m² long before DisplayHDR was around. If you want to experience HDR at its fullest, look past DisplayHDR 400 to much higher brightness levels.


Good laptops may fare better with HDR content, since they can regularly push brighter than a cheap gaming monitor. However, some cheap laptops offer very little luminance at all. Phones are surprisingly capable of high brightness, though usually in the name of readability in bright sunlight; some, such as the Samsung Galaxy S21 Ultra, reach just under 1000 nits.

When it comes to contrast, though, HDR-compliant OLED screens often leave all these displays in the dark. That’s largely down to an OLED’s fundamental construction: each OLED pixel emits its own light, so the panel doesn’t need the backlight an LCD requires, and a pixel can switch off completely to show true black. That means the contrast of an OLED panel can far exceed that of an LCD, even an LCD with very localised backlighting, which makes OLED great for HDR content.
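
To put numbers on that, contrast ratio is simply the brightest white a panel can show divided by its darkest black. The short Python sketch below uses made-up, illustrative luminance figures (not measurements of any real monitor) to show why a panel whose pixels can switch off almost entirely pulls so far ahead. The function names are our own:

```python
import math


def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Brightest white divided by darkest black, both in cd/m2 (nits)."""
    if black_nits <= 0:
        # A pixel that is fully switched off emits no light: the ratio is unbounded.
        return math.inf
    return white_nits / black_nits


def dynamic_range_stops(white_nits: float, black_nits: float) -> float:
    """The same ratio in photographic stops; each stop doubles the luminance."""
    return math.log2(contrast_ratio(white_nits, black_nits))


# Illustrative figures only, not measurements of any real product.
panels = {
    "LCD (backlight leaks through)": (400.0, 0.4),
    "OLED (near-black pixels almost off)": (400.0, 0.0005),
}
for name, (white, black) in panels.items():
    ratio = contrast_ratio(white, black)
    stops = dynamic_range_stops(white, black)
    print(f"{name}: {ratio:,.0f}:1, about {stops:.1f} stops")
```

Both hypothetical panels hit the same peak here; the difference comes entirely from the black level.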

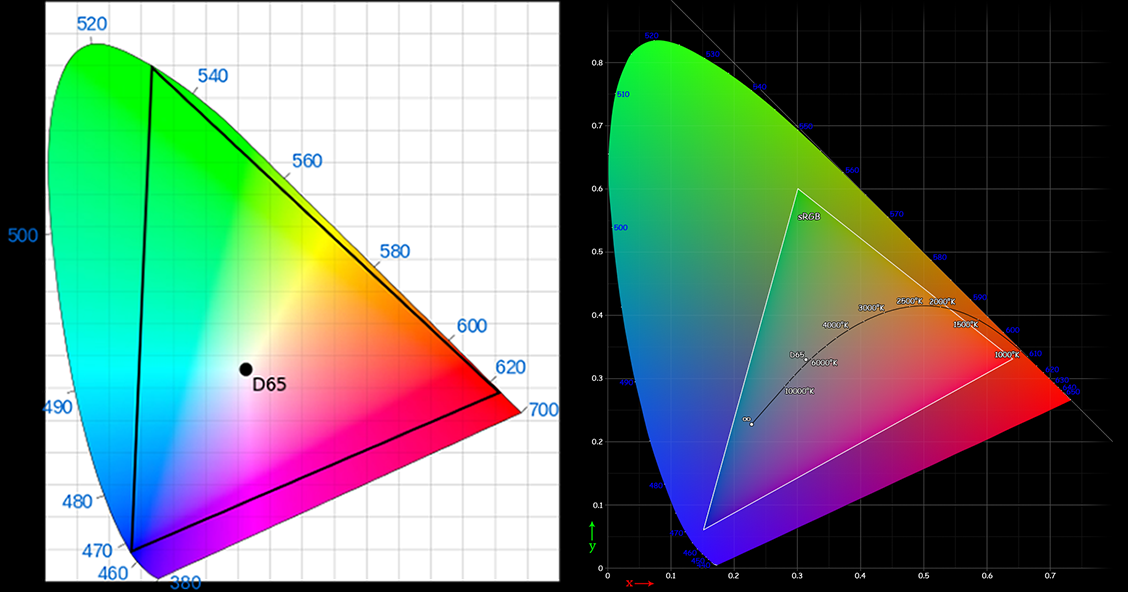

Colour also gets a makeover, with HDR specs requiring a full 10- or 12-bit colour depth per channel and a wider colour space that is managed across the OS via a set of active standards. Most PC displays only provide 6- or 8-bit colour per channel within sRGB, a much narrower colour space that covers only a small subset of the colours HDR can address. And even when the hardware can do more, software peculiarities make using legacy enhanced colour modes cumbersome.
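
The jump from 8-bit to 10- or 12-bit colour is bigger than it sounds, because each extra bit doubles the number of shades per channel, and the total is multiplied across red, green, and blue. A quick Python sketch of the arithmetic (helper names are our own):

```python
def shades_per_channel(bits: int) -> int:
    """Distinct levels a single colour channel (R, G or B) can take."""
    return 2 ** bits


def total_colours(bits: int) -> int:
    """Every combination of red, green and blue levels."""
    return shades_per_channel(bits) ** 3


for bits in (6, 8, 10, 12):
    print(f"{bits:>2}-bit: {shades_per_channel(bits):>5} shades per channel, "
          f"{total_colours(bits):,} colours")
```

That works out to 256 shades per channel (about 16.7 million colours) at 8-bit, 1,024 (about 1.07 billion) at 10-bit, and 4,096 (about 68.7 billion) at 12-bit.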

sRGB, on the right, provides just a third of colors available to HDR.

Currently, PC monitors that support wide color gamut, or WCG, are generally aimed at professional use, such as image processing or medical research applications. Games and other software simply ignore the extra colors, and often end up looking oversaturated or otherwise misadjusted when their sRGB colors are mapped onto a wide-gamut display, unless the hardware takes special steps to emulate the smaller color space.
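
Here is a minimal sketch of what mapping the smaller colour space properly involves, assuming the wide-gamut target is the BT.2020 (Rec. 2020) colour space that HDR10 uses. It decodes an sRGB value to linear light, then converts it with the BT.709-to-BT.2020 matrix published in ITU-R BT.2087 (sRGB shares BT.709’s primaries and white point). Skipping that conversion is what makes SDR content look oversaturated on a wide-gamut panel. The function names are illustrative, not any OS or driver API:

```python
# Linear BT.709/sRGB RGB -> linear BT.2020 RGB (same D65 white point),
# using the conversion matrix from ITU-R BT.2087.
SRGB_TO_BT2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def srgb_decode(c: float) -> float:
    """Undo the sRGB transfer curve: encoded 0..1 value -> linear light 0..1."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def srgb_to_bt2020_linear(rgb: tuple[float, float, float]) -> tuple[float, ...]:
    """Express an sRGB colour in terms of the wider BT.2020 primaries."""
    linear = [srgb_decode(c) for c in rgb]
    return tuple(sum(m * v for m, v in zip(row, linear)) for row in SRGB_TO_BT2020)


red = (1.0, 0.0, 0.0)

# Unmanaged: the sRGB values are sent as-is, so a BT.2020 panel lights its own,
# far more saturated red primary and the colour looks oversaturated.
naive = tuple(srgb_decode(c) for c in red)

# Managed: convert first, so sRGB red becomes a mix of BT.2020 primaries that
# reproduces the colour the content was authored in.
managed = srgb_to_bt2020_linear(red)

print("unmanaged:", naive)
print("managed:  ", tuple(round(v, 4) for v in managed))
```

Each matrix row sums to 1, so sRGB white stays white after conversion; only saturated colours move.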

HDR standards avoid the confusion by including metadata with the video stream that helps manage the color space properly, making sure applications look correct and take optimal advantage of the improved display capabilities. To handle all the extra data, some HDR variants make HDMI 2.0a or HDMI 2.1 the minimum display connector requirement, a long-overdue upgrade on the ubiquitous, lower-bandwidth HDMI 1.4 standard.
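
Some rough arithmetic shows why the extra data needs a faster connector. The Python sketch below computes the raw data rate of uncompressed 4K 60Hz RGB video at different bit depths (visible pixels only, so it is a lower bound) and compares it with each HDMI version’s published maximum link rate after line-coding overhead. HDMI 2.0a has the same bandwidth as 2.0. Treat this as a back-of-the-envelope check with our own helper names, not a cable or port compatibility tool:

```python
# Published maximum link rates (Gbit/s) and the share left after line coding.
HDMI_LINKS = {
    "HDMI 1.4": (10.2, 8 / 10),   # TMDS signalling, 8b/10b coding
    "HDMI 2.0": (18.0, 8 / 10),   # TMDS signalling, 8b/10b coding (2.0a is the same)
    "HDMI 2.1": (48.0, 16 / 18),  # FRL signalling, 16b/18b coding
}


def active_pixel_gbps(width: int, height: int, fps: int, bits_per_channel: int) -> float:
    """Uncompressed RGB (three channels) data rate for visible pixels only.

    Blanking intervals are ignored, so a real link needs somewhat more than this.
    """
    return width * height * fps * bits_per_channel * 3 / 1e9


def usable_gbps(link: str) -> float:
    """Link rate left for actual data once line-coding overhead is removed."""
    raw, efficiency = HDMI_LINKS[link]
    return raw * efficiency


def links_with_headroom(need_gbps: float) -> list[str]:
    """HDMI versions whose usable rate exceeds even this lower-bound estimate."""
    return [name for name in HDMI_LINKS if usable_gbps(name) > need_gbps]


for bits in (8, 10, 12):
    need = active_pixel_gbps(3840, 2160, 60, bits)
    print(f"4K 60Hz, {bits}-bit RGB: at least {need:.2f} Gbit/s -> {links_with_headroom(need)}")
```

On these numbers, 10-bit 4K60 in full RGB already outgrows HDMI 2.0’s roughly 14.4 Gbit/s of usable bandwidth, which is why such links typically fall back to chroma subsampling, while HDMI 2.1 has headroom to spare.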

What HDR standards should I look for?

Most of the time it comes down to two: the proprietary Dolby Vision, which features 12-bit color and dynamic metadata; and the open standard HDR10, which supports 10-bit color and only provides static metadata at the start of a video stream.
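
For a sense of how HDR10’s 10-bit signal describes brightness: HDR10 encodes absolute luminance with the Perceptual Quantizer (PQ) curve defined in SMPTE ST 2084, which covers 0 to 10,000 cd/m² and spends more code values on darker tones, where our eyes are most sensitive. A minimal Python sketch of the encoding direction follows. The helper names are our own, and the 10-bit step uses full range for simplicity, whereas HDR10 video normally uses the narrower 64 to 940 code range:

```python
# Constants from SMPTE ST 2084 (the PQ curve used by HDR10).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance (0..10,000 cd/m2) -> PQ signal value (0..1)."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1 + C3 * y_m1)) ** M2


def to_10bit_full_range(signal: float) -> int:
    """Quantise a 0..1 signal to a 10-bit code (0..1023); full range for simplicity."""
    return round(signal * 1023)


for nits in (0.1, 1, 100, 400, 1000, 10000):
    signal = pq_encode(nits)
    print(f"{nits:>7} cd/m2 -> signal {signal:.3f} -> 10-bit code {to_10bit_full_range(signal)}")
```

With these formulas, 100 cd/m² lands at a signal of roughly 0.51, so about half the code values describe everything up to typical SDR brightness and the rest are left for highlights.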

Most gaming hardware manufacturers and software studios first turned to HDR10, because Dolby Vision, with its license fees and extra hardware, is the more expensive implementation, which has slowed its adoption. Microsoft supports HDR10 on its Xbox Series X/S, as does Sony on the PlayStation 5. Some older consoles also support HDR10.

However, Microsoft recently released Dolby Vision Gaming support on the Xbox Series X and Series S with more than 100 HDR titles available or coming soon on the platform.

When it comes to PC gaming, the hardware support is often there, but it comes down to per-game support. Most PC HDR titles support HDR10, and only a few support Dolby Vision. One such game is Mass Effect: Andromeda, if you want to try Dolby Vision out for yourself.

Dolby Vision is often seen as the superior choice, but you’ll need a great HDR gaming monitor to get the most out of it. Proponents of Dolby Vision tout its greater colour depth, more demanding hardware standards, frame-by-frame dynamic adjustment of content, and HDR10 compatibility. That said, gaming is moving towards the cheaper, good-enough HDR10 standard on its own.

Since HDR10 uses static metadata, set once for the whole stream, content that is mostly very bright or mostly very dark can struggle to render the occasional scene at the other end of the spectrum, which can appear either murky or blown out. HDR10+ adds Dolby Vision-style dynamic metadata to eliminate this problem, and it’s now the default HDR10 variant for the HDMI 2.1 standard. HDR10+ also keeps the open model that made HDR10 so easy for manufacturers to adopt.
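
A deliberately crude Python sketch of why static metadata struggles, assuming a hypothetical 600 cd/m² monitor and a stream whose brightest pixel (the kind of value HDR10 carries as static MaxCLL metadata) is 4,000 cd/m². Real tone mapping uses smooth curves rather than the single scale factor used here, and the scene values are invented for illustration:

```python
def tone_map(nits: float, content_peak: float, display_peak: float) -> float:
    """Crude tone mapping: scale down just enough that content_peak fits on
    the display, and never scale up."""
    scale = min(1.0, display_peak / content_peak)
    return nits * scale


DISPLAY_PEAK = 600.0  # hypothetical monitor peak, cd/m2
STREAM_PEAK = 4000.0  # static metadata: brightest pixel anywhere in the stream

# Hypothetical per-scene peak luminance, cd/m2.
scenes = {"dark cave": 200.0, "sunlit desert": 3000.0}

for name, scene_peak in scenes.items():
    static = tone_map(scene_peak, STREAM_PEAK, DISPLAY_PEAK)   # one value for the whole stream
    dynamic = tone_map(scene_peak, scene_peak, DISPLAY_PEAK)   # a value per scene
    print(f"{name}: static -> {static:.0f} cd/m2, dynamic -> {dynamic:.0f} cd/m2")
```

With one stream-wide value, the dark scene is squashed to 30 cd/m² and looks murky; per-scene (dynamic) metadata lets it keep its full 200 cd/m², while the bright scene is still brought down to fit the panel.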

More recently, the HDR10+ GAMING standard was announced, because we needed more standards in HDR land. It was created to give developers and players even more reasons to use HDR10+. It uses Source Side Tone Mapping for more accurate game output to compatible displays, and it also includes automated HDR calibration.

If you’re a video or film enthusiast buying based on image quality and content that’s already graded, though, Dolby Vision might be of more interest. Netflix, for example, is particularly interested in Dolby Vision, with almost all of its internally produced content supporting it. Vudu and Amazon also offer Dolby Vision content.

In case you’re wondering, there are other HDR standards: HLG, or Hybrid Log Gamma, developed by the BBC and NHK and used on YouTube; and Advanced HDR by Technicolor, used largely in Europe, which allows playback of HDR content on SDR displays via tricks with gamma curves.
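
Hybrid Log Gamma gets its name from its transfer curve: ITU-R BT.2100 defines it as a square-root (gamma-like) segment for darker tones joined to a logarithmic segment for highlights. A minimal Python sketch of that curve (the HLG OETF, which maps normalised scene light to a signal value), using the constants from the standard; the function name is our own:

```python
import math

# HLG OETF constants from ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # about 0.55991073


def hlg_oetf(e: float) -> float:
    """Normalised scene light (0..1) -> HLG signal value (0..1)."""
    if e <= 1 / 12:
        return math.sqrt(3 * e)          # "gamma" part: square-root curve for darker tones
    return A * math.log(12 * e - B) + C  # "log" part: compresses the highlights


for e in (0.0, 0.01, 1 / 12, 0.25, 0.5, 1.0):
    print(f"scene light {e:.3f} -> signal {hlg_oetf(e):.3f}")
```

The two segments meet at a signal of 0.5. The conventional gamma-like lower segment is a big part of why HLG pictures still look reasonable on SDR displays, without needing any metadata.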

So what is DisplayHDR then?

DisplayHDR is a specification from VESA that sets rules for what constitutes a good HDR experience on the hardware displaying it. Each tier, from DisplayHDR 400 to DisplayHDR 1400, sets requirements for peak luminance, dynamic contrast ratio, colour gamut, and features like local dimming to ensure a good HDR user experience.

Basically, monitors were being marketed as HDR10 compliant without the hardware needed to make HDR10 content really pop. So VESA (the Video Electronics Standards Association), an industry body that creates interoperable standards for electronics, stepped in with its own set of rules. Displays that meet them carry a DisplayHDR badge, which makes a TV or monitor’s HDR capabilities easy to spot.

The DisplayHDR specifications are the best place to start if you’re just now looking into a compatible HDR gaming monitor. You can dig into the specifics from there, but knowing whether you want a DisplayHDR 1400 set or a DisplayHDR 600 set can really help narrow down your choices.
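
In the DisplayHDR 400 to 1400 range, each tier’s number is the minimum peak luminance, in cd/m², that the tier demands, so a spec sheet’s peak brightness makes a quick first filter. A hypothetical Python helper illustrating that filter follows. Actual certification also tests black level, colour gamut, bit depth, and more, so this only says which tier a monitor’s peak figure could support, not which it has earned:

```python
from typing import Optional

# The number in each DisplayHDR tier's name is the minimum peak luminance
# (cd/m2) that tier requires.
DISPLAYHDR_TIERS = (400, 500, 600, 1000, 1400)


def highest_tier_by_peak(peak_nits: float) -> Optional[str]:
    """Highest tier that this peak-luminance figure alone could satisfy.

    Hypothetical shopping filter: certification also checks black level,
    colour gamut, bit depth and more, which this ignores.
    """
    eligible = [tier for tier in DISPLAYHDR_TIERS if peak_nits >= tier]
    return f"DisplayHDR {max(eligible)}" if eligible else None


for peak in (350, 450, 650, 1100, 1600):
    print(peak, "cd/m2 peak ->", highest_tier_by_peak(peak) or "below DisplayHDR 400")
```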

(Image credit: VESA)

Is my graphics card HDR ready?

Almost definitely, yes. One place where PCs are already prepared for HDR is the graphics card market. While monitors often lag behind their TV counterparts in HDR implementation, GPUs have been ready for HDR for years now, thanks to the healthy rivalry between Nvidia and AMD.

Both AMD and Nvidia also have Variable Refresh Rate (VRR) technologies that are HDR compliant. Nvidia has G-Sync Ultimate, with the loose promise of “lifelike HDR”, while AMD has FreeSync Premium Pro, with low-latency SDR and HDR support. These are the top tiers of Nvidia’s and AMD’s VRR technologies, so bear that in mind when looking into a potential HDR gaming monitor.

Are all games HDR ready?

Many new games support HDR nowadays, but older software won’t take advantage of the wider colour and contrast capabilities without patching. Those older games may play normally on HDR-equipped systems, but you won’t see any benefits without some fresh code added to the mix.

Fortunately, leveraging HDR’s superior technology doesn’t require a ground-up software rewrite. A fairly straightforward algorithmic mapping that expands SDR colour and brightness values into HDR ranges can convert SDR titles without massive effort.
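
A minimal Python sketch of that kind of SDR-to-HDR expansion, under made-up parameters: it decodes an sRGB value to linear light, scales it by a hypothetical 200 cd/m² "paper white", and stretches only the top of the range towards a hypothetical 1,000 cd/m² peak. It illustrates the general idea of inverse tone mapping; it is not how Windows’ Auto HDR works internally, and the function names are our own:

```python
def srgb_decode(c: float) -> float:
    """Undo the sRGB transfer curve: encoded 0..1 value -> linear light 0..1."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def expand_sdr_to_hdr(encoded: float, paper_white: float = 200.0,
                      peak: float = 1000.0, knee: float = 0.75) -> float:
    """Hypothetical SDR -> HDR luminance expansion for one value (0..1 in, cd/m2 out).

    Linear light up to `knee` is simply scaled by `paper_white`, so shadows and
    midtones look as they would on an SDR screen. Above the knee, highlights are
    stretched linearly so SDR's maximum lands on the display's `peak`.
    """
    linear = srgb_decode(encoded)
    if linear <= knee:
        return linear * paper_white
    knee_nits = knee * paper_white
    return knee_nits + (linear - knee) / (1 - knee) * (peak - knee_nits)


for value in (0.25, 0.5, 0.9, 1.0):
    print(f"SDR value {value:.2f} -> {expand_sdr_to_hdr(value):.0f} cd/m2")
```

Leaving the lower range alone and expanding only the highlights is one simple way to gain HDR punch without making the whole image uncomfortably bright.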

One way to do this is Windows’ Auto HDR feature, which actually takes SDR games and cleverly makes them HDR-ready.

Does Windows support HDR?

Yes, Windows 10 and Windows 11 support HDR content and will work with HDR10-capable screens. You may need to enable HDR in Settings, however: go to Settings > System > Display, select your HDR-ready display, and turn on Use HDR.

One thing to bear in mind, however, is that Windows and HDR don’t always mix well, and that includes HDR-compatible games. Some require you to enable HDR in Windows to get the full HDR experience in-game, while others don’t. Microsoft would rather you have HDR on in Windows and have the game play nicely with that, but you might have to fiddle around a little to get it looking good.

As previously mentioned, Windows also offers Auto HDR. This “will take DirectX 11 or DirectX 12 SDR-only games and intelligently expand the color/brightness range up to HDR,” Microsoft says.

You can enable it by flicking the switch just below the Use HDR switch in Settings, and from there it should do everything for you. Neat.